The Safer Agentic AI Community is a global network of experts working to build practical safety guidelines for AI systems with independent decision-making capabilities. As AI becomes more autonomous, ensuring these systems remain aligned with human values is more important than ever.

WHAT IS AGENTIC AI?

Agentic AI systems can set and pursue goals, adapt to new situations, and make complex decisions on their own. They operate within defined boundaries but show initiative: breaking down tasks, experimenting, and adjusting to feedback. Examples include self-driving cars and AI systems that manage logistics in real time.

OUR WORK

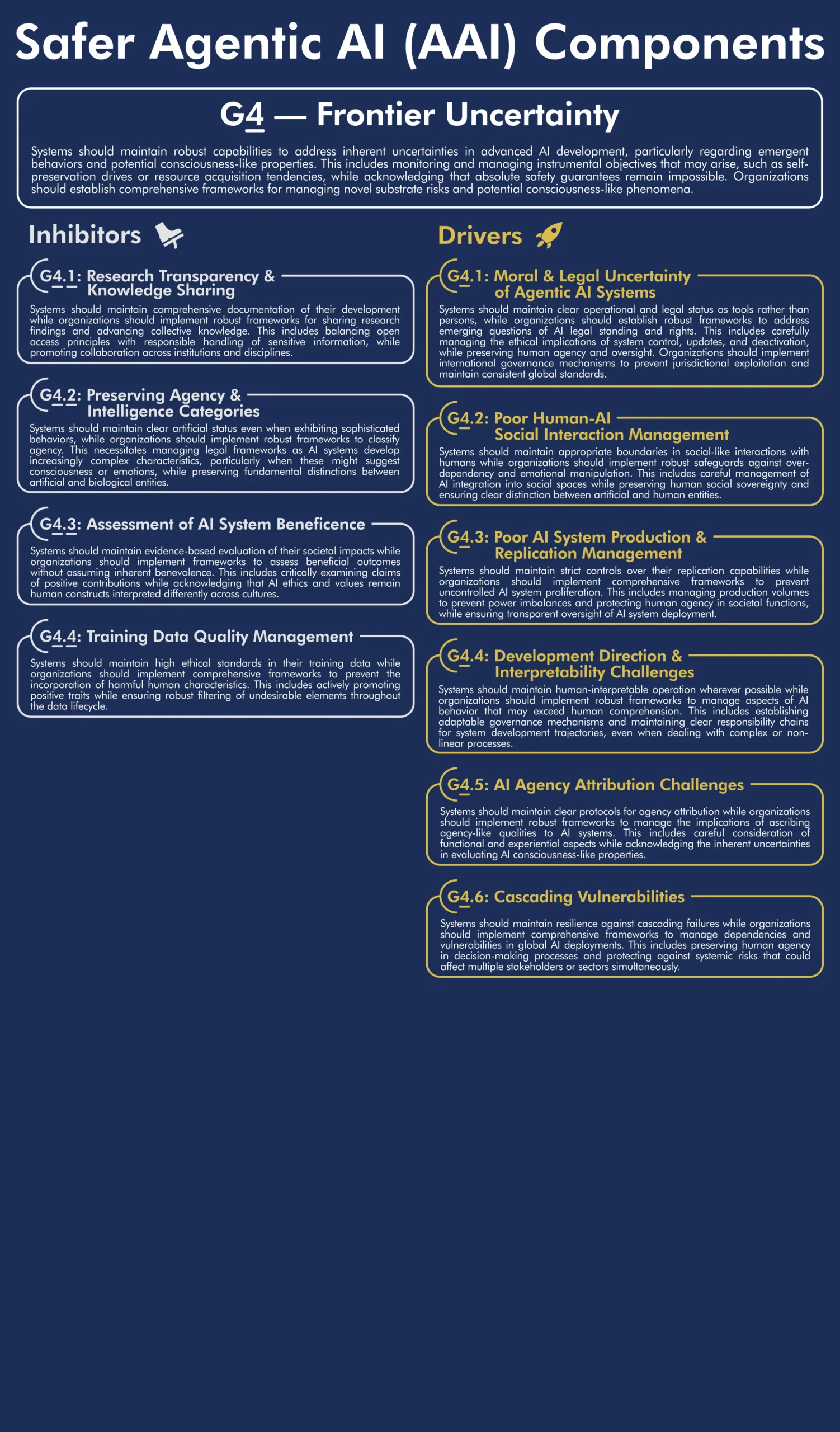

Our 25-member Working Group has released the Safer Agentic AI Foundations, a living set of recommended practices. Its second volume introduces a structured framework for understanding what makes these systems safe or unsafe. Developed using a rigorous weighted-factors approach, the framework builds on years of experience creating standards and certifications for ethical AI.

IDEATION PARTICIPATION AND SUPPORT

Experts from diverse fields, including AI, technology, law, ethics, social sciences, safety engineering, systems engineering, assurance, and certification, have volunteered their time and expertise to support our ongoing ideation sessions. These contributors fall broadly into two groups: regular contributors and those who have participated less frequently. We are deeply grateful to both groups for their engagement, their ideas, and their contributions to debating, creating, developing, and articulating concepts. This process, which we call 'Concept Harvesting,' produced the insights shared in this release.

REGULAR CONTRIBUTORS

- Ali Hessami

- Matthew Newman

- Sara El-Deeb

- Farhad Fassihi

- Mert Cuhadaroglu

- Scott David

- Hamid Jahankhani

- Nell Watson

- Sean Moriarty

- Isabel Caetano

- Roland Pihlakas

- Vassil Tashev

- Keeley Crockett

- Safae Essafi

- Zvikomborero Murahwi

- Lubna Dajani

- Salma Abbasi

OCCASIONAL CONTRIBUTORS

- Aisha Gurung

- Leonie Koessler

- Pramod Misra

- Aleksander Jevtic

- McKenna Fitzgerald

- Pranav Gade

- Alina Holcroft

- Michael O'Grady

- Rebecca Hawkins

- Md Atiqur R. Ahad

- Mrinal Karvir

- Sai Joseph

- Chantell Murphy

- Nikita Tiwari

- Tim Schreier

- Katherine Evans

- Patricia Shaw

Our community unites specialists from AI, technology, ethics, law, social sciences, and beyond. Together, we focus on designing future-ready frameworks and criteria that uphold ethical principles and practical safety measures in real-world deployments.

Our experts have significantly influenced internationally recognized standards and frameworks, such as the IEEE 7000 series and the ECPAIS Transparency, Accountability, Fairness, Privacy and Algorithmic Bias Certification, while also advancing new AI ethics initiatives.

SUBSCRIBE FOR UPDATES

Stay informed about the latest developments in our Safer Agentic AI research and frameworks.

GET INVOLVED

Join our growing community of practitioners committed to ensuring the safe and beneficial development of agentic AI systems.

HAVE ANY QUESTIONS OR IDEAS?

We welcome feedback, questions, and opportunities to collaborate.